Rendering Markdown into HTML using PHP

3rd December 2022

One of the good things about using virtual private servers for hosting websites, in preference to shared hosting or a web application service like WordPress.com or Tumblr, is the added control and flexibility that you get. There was a time when HTML, CSS and client-side scripting were all that was available from the shared hosting providers that I was using. Then, static websites were my lot until it became possible to use Perl server-side scripting. PHP predominates now, but Python or Ruby cannot be discounted either.

Being able to install whatever you want is a bonus as well, though it means that you also are responsible for the security of the servers that you use. The hosting provider will look after infrastructure security, but the security of your own machine is your own concern. As many might say, added power always means added responsibility.

The reason that these thoughts emerge here is that getting PHP to render Markdown as HTML needs the installation of Composer. Without that, you cannot use the CommonMark package to do the required back-work. All the commands that you see here will work on Ubuntu 22.04. First, you need to download the Composer installer, and executing the following command will accomplish this:

curl https://getcomposer.org/installer -o /tmp/composer-setup.php

Before running the installer, it does no harm to ensure that all is well with the script. That means that capturing its published SHA-384 signature using the following command is wise:

HASH=`curl https://composer.github.io/installer.sig`

Once you have the script signature, then you can check its integrity using this command:

php -r "if (hash_file('sha384', '/tmp/composer-setup.php') === '$HASH') { echo 'Installer verified'; } else { echo 'Installer corrupt'; unlink('/tmp/composer-setup.php'); } echo PHP_EOL;"

The result that you want is “Installer verified”. If you see “Installer corrupt” instead, you have some investigating to do before going any further. Otherwise, just execute the installation command:

sudo php /tmp/composer-setup.php --install-dir=/usr/local/bin --filename=composer
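The php -r one-liner above does the job, but the same integrity check can also be sketched as a small shell function. This assumes sha384sum from GNU coreutils is available (it is on Ubuntu 22.04), and verify_sha384 is a hypothetical helper name, not part of Composer. It simply compares the downloaded file's digest with the published signature:

```shell
#!/bin/sh
# verify_sha384 FILE EXPECTED_HASH  (hypothetical helper, not part of Composer)
# Prints "Installer verified" and returns 0 when FILE's SHA-384 digest
# matches EXPECTED_HASH; prints "Installer corrupt" and returns 1 otherwise.
verify_sha384() {
    file=$1
    expected=$2
    # Reading from stdin makes sha384sum print "<digest>  -",
    # so cut keeps just the hex digest
    actual=$(sha384sum < "$file" | cut -d ' ' -f 1)
    if [ "$actual" = "$expected" ]; then
        echo "Installer verified"
        return 0
    fi
    echo "Installer corrupt" >&2
    return 1
}

# Usage, following the same steps as above (needs network access):
# curl -sS https://getcomposer.org/installer -o /tmp/composer-setup.php
# HASH=$(curl -sS https://composer.github.io/installer.sig)
# verify_sha384 /tmp/composer-setup.php "$HASH" || rm -f /tmp/composer-setup.php
```

Returning a status code rather than only printing a message means the check can gate the installation step in a script.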

With Composer installed, the next step is to run the following command in your web server's document root. That location matters because the PHP script below loads the package's autoloader from there.

composer require league/commonmark

Then, you can use it in a PHP script like so:

define("ROOT_LOC", $_SERVER['DOCUMENT_ROOT']);

require ROOT_LOC . '/vendor/autoload.php';

use League\CommonMark\CommonMarkConverter;

$converter = new CommonMarkConverter();

echo $converter->convertToHtml(file_get_contents(ROOT_LOC . '<location of markdown file>'));

The first line records the absolute location of your web server's document root, which is then used to build the paths to the Composer autoload script and the required Markdown file. The second line loads that autoload script, the third imports the CommonMarkConverter class name, and the fourth creates a new converter object for the desired transformation. The last line reads the Markdown file, converts it to HTML and outputs the result.

If you need to render the output of more than one Markdown file, then repeating the last line from the preceding block with a different file location is all you need to do. The converter object persists, so it can be reused like any other variable without having to be created again every time.

The idea of building a website using PHP to render Markdown has come to mind, but I will leave it at custom web pages for now. If an opportunity comes, then I can examine the idea again. Previously, I had to edit HTML, but Markdown is friendlier to edit, so that is a small advance for now.

Tidying dynamic URLs

15th June 2007

A few years back, I came across a very nice article discussing how you would make a dynamic URL more palatable to a search engine, and I made good use of its content for my online photo gallery. The premise was that URLs like the one below are no help to search engines indexing a website. Though this is received wisdom in some quarters, it doesn’t seem to have done much to stall the rise of WordPress as a blogging platform.

http://www.mywebsite.com/serversidescript.php?id=394

That said, WordPress does offer a friendlier URL display option too, and you can see this in use on this blog. Such URLs look a little like the example below, and the approach is equally valid for both Perl and PHP. I have been using it for the Perl scripts powering my online photo gallery and now want to apply the same thinking to a gallery written in PHP:

http://www.mywebsite.com/serversidescript.pl/id/394

The way that both expressions work is that a web server will chop pieces from a URL until it reaches a physical file. For a query URL, the extra information after the question mark is retained in the QUERY_STRING variable, while any extraneous directory path information is passed in the PATH_INFO variable. Both Perl and PHP expose these through a collection of entries: in Perl, it is the %ENV hash, while $_SERVER is the PHP equivalent. Thus, $ENV{QUERY_STRING} and $_SERVER['QUERY_STRING'] trap what comes after the “?”, while $ENV{PATH_INFO} and $_SERVER['PATH_INFO'] pick up the extra information following the file name (the “/id/394” in the example). From there on, the usual rules apply regarding cleaning of any input, but changing from one approach to the other should not be too arduous.
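Shell scripts can be run as CGI programs too, and the web server hands them PATH_INFO and QUERY_STRING as ordinary environment variables. As a minimal sketch of the input-cleaning step, assuming the /id/394 layout from the example URL, the hypothetical function below extracts the id and accepts nothing but digits:

```shell
#!/bin/sh
# get_id_from_path_info PATH  (hypothetical helper for illustration)
# Expects PATH in the "/id/<number>" form used in the example URL.
# Prints the number and returns 0, or returns 1 if the input does not fit.
get_id_from_path_info() {
    path=$1
    # Drop one leading and one trailing slash: "/id/394/" -> "id/394"
    path=${path#/}
    path=${path%/}
    key=${path%%/*}     # text before the first "/"
    value=${path#*/}    # text after the first "/"
    [ "$key" = "id" ] || return 1
    # Cleaning: reject empty values and anything containing a non-digit
    case $value in
        ''|*[!0-9]*) return 1 ;;
    esac
    printf '%s\n' "$value"
}

# In a CGI context, the web server sets PATH_INFO before running the script:
# id=$(get_id_from_path_info "$PATH_INFO") || id=""
```

Rejecting anything that is not purely numeric keeps stray characters away from whatever later uses the id, and the same check carries over readily to Perl or PHP.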

More Linux Distributions

21st September 2012

If a certain Richard Stallman had his way, Linux would be called GNU/Linux because he wants GNU to have some of the credit, but we’re lazy creatures and we all call it Linux instead. What still amazes me is the number of Linux distributions that are out there. This list captures those that do not fit into other lists that you can find in the sidebar, so do look at the others as well.

Many fit into the desktop and server computing paradigms while a minority are very distinctive. It is easier to write about the latter than the former, though personal experiences do add to any narrative. It is tempting to think that everything has become static after more than thirty years, yet that may be foolish given the ongoing flux in the world of technology. Only change is ever a constant presence.

More in the Way of Privacy

The controversy about security agencies eavesdropping on internet communications has upset some, and here are some distros offering anonymity and privacy. Of course, none of these should be used for unlawful purposes; their real value lies with those in less liberal countries who need invisibility to speak their minds.

It is harder and harder to create a Linux distro that is very different from the rest, but this one uses application virtualisation for added security. You can organise your software into different domains so that you work more securely when moving data between applications from different domains.

There is more than a hint of privacy-mindedness in this distro when you look long enough at what it offers. Cinnamon, MATE and Xfce desktop environments are part of the offer and there is added software for extra privacy and security.

This is an option for those who are worried about being tracked online. All internet connections are sent via the Tor network, and it runs exclusively as a live distro from CD, DVD or USB stick, so no trace is left on any PC. The basis is Debian and the distro’s name is an acronym: The Amnesic Incognito Live System. For those of us living in a democratic country, the effort may seem excessive, but that changes in other places where folk are not so fortunate. The use of Tor may not be perfect, but it should help in combination with the use of different sessions for different tasks and encrypting any files. There even is an option to make the desktop appear like that of Windows XP for extra discreetness of use.

Most Linux distros that have enhanced security and anonymity as a feature are not installable on a PC, but that is exactly what’s unique about Whonix. It’s based on Debian, and all internet connections go via the Tor network. The part handling those connections is called Whonix-Gateway, while Whonix-Workstation is what you use to work on your system. It may sound like being overly careful, but it has me intrigued.

Entertainment

In many ways, these are appliance distros for anyone who just wants an install-it-and-go approach to things. That works better with dedicated devices than with multipurpose machines, so that is one thing that needs to be kept in mind.

The idea behind this offering is what it can do for console gamers. Legacy games and peripherals will work, and there even is support for Raspberry Pi as well.

The main purpose of this distro is to offer a home for the KODI entertainment centre on PC and Raspberry Pi devices. It follows from the now defunct OpenELEC project, which ran into trouble when developers’ voices were not given a hearing.

The acronym stands for Open-Source Media Centre and there is KODI here too. Though the distro also is based on Debian, one is tempted to wonder why anyone would not just install Debian and add KODI on top of it. The answer possibly has something to do with added user-friendliness for those who do not want to deal with such things.

Mandriva Offshoots

Mandrake once was a spin of Red Hat with a more user-friendly focus. In the days before the appearance of Ubuntu, it would have been a choice for those not wanting to overcome obstacles such as a level of hardware support that was much less than what we have today. Later, Mandrake became Mandriva following litigation and the acquisition of Conectiva in 2005. The organisation declined after those heady days and became defunct during 2015. Its legacy continues though in the form of two spin-off projects, so all the work of forebears has not been lost.

It was the uncertainty surrounding the future of Mandriva that originally caused this project to be started. Beginnings have been promising, so this is one to watch, though you have to wonder if the now community-based OpenMandriva is stealing some of its limelight.

Of the pair that is listed here, it is OpenMandriva which is a continuation of the now-defunct Mandriva. Seeing how things progress for a project with user-friendliness at its heart will be of interest in these days when Debian, Ubuntu and Linux Mint are so pervasive. Even with those, there are KDE options, so there is a challenge in place.

Anything Russian may not be everyone’s choice given the state of world affairs at the time of writing, yet this still is an offshoot of Mandriva so it gets a mention in this list. Desktop environment options include KDE, XFCE and LXQt and there are various use cases covered by a range of solutions.

Others

Not every distro falls into the above categories, and some that you find here may surprise you. There are some better-known names like openSUSE that go their own way.

Aside from the founder’s dislike of ISO disk images for whatever reason, this distro has its own eccentricities. For example, it is container-friendly, runs in memory as root and much more. This is branded as an experimental distro, and it is that in many ways.

This project creates respins of openSUSE for the sake of a more refined experience. For instance, there are live booting ISO images as well as inclusion of media codecs. There is plenty of choice too when it comes to desktop environments.

From what I have seen, this project seems to be supporting the same needs as Arch, albeit with all software needing to be compiled, so there’s more of a DIY approach. The wiki also comes in handy for those users.

Billing itself as a lean independent distribution focussing on Qt and KDE, this is built from the ground up without any dependence on other distros. Some tools, like pacman, naturally come from elsewhere in this otherwise standalone offering.

Here is another distro apart from Ubuntu that has an African name, the Zulu for big chief this time around. It came to my notice among the pages of the now defunct Micro Mart magazine and uses MATE, XFCE, Enlightenment and KDE as its desktop environment choices.

SuSE Linux was one of the first Linux distros that I started to explore, and I even had it loaded on my home PC as a secondary operating system for quite a while before my attention went elsewhere. Were it not for a PC Plus cover-mounted CD, I might never have discovered it, and it bested Red Hat, which was as prominent then as Fedora is today. When SuSE fell into Novell’s hands, it became both openSUSE and SuSE Linux Enterprise Edition. The former is the community edition and the latter is what Novell, now itself an Attachmate Group company, offers to business customers. As it happens, I continue to keep an eye on openSUSE and even had it on a secondary PC before font resolution deficiencies had me looking elsewhere. While it’s best known for its KDE variant, there is a GNOME one too and it is this that I have been examining.

There was a time when this was being touted as an Ubuntu killer but it never seems to have made good on that promise. Recent troubles within the project haven’t helped either, especially with a long wait between releases.

This Turkish distro recently got reviewed in Linux Format and they were not satisfied with its documentation. It does not help that the website is not in English, so you need a translation tool of your choosing for this one.

Though there also is a spin using the MATE desktop environment, this distro is perhaps better known as the home of the Budgie desktop environment. All of this is aimed at home computing rather than its business or enterprise counterpart. There is nothing wrong with that, and it may make the distro feel a little friendlier.

The name sounded similar for some reason, and I reckon that’s because Samsung has smartphones running Tizen on sale. The whole point of the project is to power mobile computing platforms, with only the mention of netbooks sullying an otherwise non-PC target market that includes tablets and TVs. It’s overseen by the Linux Foundation too.

A Look at a Compact System Camera

4th September 2013

During August, I acquired an Olympus Pen E-PL5, an item to which I still am becoming accustomed, and it looks as if that is set to continue. The main reason that it appealed to me was the idea of having a camera with much of the functionality of an SLR but with many of the dimensions of a compact camera. In that way, it was a step up from my Canon PowerShot G11 without carrying around something that was too bulky.

Before I settled on the E-PL5, I had been looking at Canon’s EOS M and got to hear about its sluggish autofocus. That it had no mode dial on its top plate was another consideration, though it does pack in an APS-C sized sensor (with Canon’s tendency to overexpose finding a little favour with me too on inspection of images from a well-aged Canon EOS 10D) at a not so unappealing price of around £399. A group test of it and similar cameras in Practical Photography was enough for me to buy that particular issue, and the magazine liked the similarly priced Olympus Pen E-PM2 more than the Canon. Though it was a Panasonic that won top honours in that test, I was intrigued enough by the Olympus option that I had a further look. Unlike the E-PM2 and the EOS M, the E-PL5 does have a mode dial on its top plate and an extra grip, so that got my vote even if it meant paying a little extra for it. Olympus Pen models had caught my attention before now because of sale prices, but this investment goes beyond that opportunism.

The E-PL5 comes in three colours: black, silver and white. Though I have a tendency to go for black when buying cameras, it was the silver option that took my fancy this time around for the sake of a spot of variety. The body itself is a very compact affair, so it is the lens that takes up most of the bulk. The standard 14-42 mm zoom ensures that this is not a camera for a shirt pocket, and I got a black Lowepro Apex 100 AW case for it; the case fits snugly around the camera, so much so that I was left wondering if I should have gone for a bigger one, but it’s been working out fine anyway. The other accessory that I added was a 37 mm Hoya HMC UV filter so that the lens doesn’t get too knocked about while I have the camera with me on an outing of one sort or another, especially since the lens, with its plastic construction, protrudes a lot further than I was expecting and doesn’t retract fully into its housing like some Sigma lenses that I use.

When I first gave the camera a test run, I had to work out how best to hold it. After all, the powered zoom and autofocus on my Canon PowerShot G11 made that camera more intuitive to hold and it has been similar for any SLR that I have used. Having to work a zoom lens while holding a dinky body was fiddly at first until I worked out how to use my right thumb to keep the body steady (the thumb grip on the back of the camera is curved to hold a thumb in a vertical position) while the left hand adjusted the lens freely. Having an electronic viewfinder instead of using the screen would have made life a little easier but they are not cheap and I already had spent enough money.

The next task after working out how to hold the camera was to acclimatise myself to its exposure characteristics. In my experience so far, it appears to err on the side of overexposure. Because I had set it to store images as raw (ORF) files, this could be sorted later, but I prefer to have a greater sense of control at the photo capture stage. Until now, I have not found a spot or partial metering button like the one that I would have on an SLR or my G11. That has meant either using exposure compensation alongside my preferred aperture priority mode or going with fully manual exposure. Other modes are available and they should be familiar to any SLR user (shutter priority, program, automatic, etc.). Currently, I am using bracketing while finding my feet, after setting the ISO to 400, increasing the brightness of the screen and adding histograms to the playback views. With my hold on the camera growing more secure, I have been using the dial to change exposure settings such as aperture (f/16 remains a favourite of mine in spite of what others may think given the size of a micro four thirds sensor) and compensation, while keeping the scene exactly the same, to test how the camera responds to each change.

While I still am finding my feet, I am seeing some pleasing results so far that encourage me to keep going; some remind me of my Pentax K10D. The E-PL5 certainly is slower to use than the G11, but that often can be a good thing when it comes to photography. That it forces a little relaxation in this often hectic world is another advantage. The G11 is having a quieter time at the moment, and any episodes of sunshine offer useful opportunities for further experimentation and acclimatisation too. So far, my entry into the world of compact system cameras has revealed them to be very different in form from compact fixed-lens cameras or SLRs. Neither truly gets replaced; instead, another type of camera has emerged.

Operating Systems – Mixing Closed and Open Source

28th November 2010

Nowadays, I am a heavy user of Linux, but that was not always the case. My entry into computing was via macOS and Windows before I was exposed to UNIX during doctoral studies in Edinburgh. At the time, what I encountered felt like a comedown from what was offered by the others. My computing career has had a few false starts and this, along with encountering Fortran 77, was one that was overcome in the fullness of time.

There are a few reasons why those impressions of UNIX may not have been fair. Low spending (or lack of funds), together with a lack of awareness of what was happening in personal computing at the time, may have played their part too. They left us with archaic hardware, and the user interfaces hardly were inspiring either. All this made the lure of PC technology all the stronger, perhaps slowing my academic progression as much as other distractions did. In time, I made up for all that and gained my university degree.

As it happened, I was more accustomed to WYSIWYG and point-and-click ways of working; the attractions of working with computer code lay in the future. Thus, Microsoft Word suited my workflow more than LaTeX, while the graphical output from OriginLab Origin looked far better than that from Unigraph. Working on computer terminals offering a two-bit X Window display felt utilitarian compared with even an 8-bit PC display, and there was better to be had in the PC world. Microsoft Windows machines were better for browsing the web too, even in an age when NCSA Mosaic and Netscape pervaded.

With my research degree offering up dark moments, I also took refuge in personal experimentation on my own Dell PC. Much of this involved Windows 9x and some missteps that impressed on me the importance of having good backups. Learning of the existence of Linux only took things further, especially on seeing it being used in a university lab and being attracted to the idea of getting a whole operating system free of charge.

Even being an impecunious university student did not stop me buying computing magazines and one sported an installation disk for a flavour of Linux that I no longer can recall. This was one experiment that did not go so well for me, offering some computer restoration practice in the process. A version that came with a book also featured and occupied some of my time looking at what could be done.

Eventually, the fascination with Linux would lead me to try out Red Hat and SuSE. By then though, I decided that experimenting on a spare PC was best and I now use virtual machines for the same task whenever I get to such things. Windows was retained on a separate machine for priority work like writing my thesis or looking for work, much as is the case for my current freelancing.

Moving away from Windows for home computing took a series of horrid experiences with Windows XP before inertia was overcome. By then, hardware support had become so much better that everything just worked. It was not like being unable to access the internet because of non-support for a software modem that needed Windows to function (those devices had become obsolete by then anyway and the one that I had was killed off by seasonal thunderstorm activity). Where once it was Linux that got virtualised, the same now applied to Windows; things had swapped. There may have been issues to resolve and new things to learn, but there were no showstoppers. It helps that a large user community means that solutions are there to be found somewhere on the web.

Linux Mint is my main operating system these days, mainly because its user focus means that things evolve gradually with every release; experiments are best kept away from everyday usage. With the Windows desktop seeing undesirable changes at times, that has to be a good thing. Encountering big surprises on a machine that you use for work is a non-starter. Otherwise, Ubuntu and Debian have their uses for hosting websites, while other Linux distributions and UNIX systems get checked out from time to time.

Using a variant of Debian’s Iceweasel that keeps pace with Firefox

5th February 2013

Left to its own devices, Debian will leave you with an ever-ageing re-branded version of Firefox that was installed at the same time as the rest of the operating system. From what I have found, the main cause of this was Mozilla’s wanting to retain control of its branding and trademarks in a manner not in keeping with Debian’s Free Software rules. This didn’t affect just Firefox but also Thunderbird, Sunbird and SeaMonkey, with Debian’s equivalents for these being Icedove, Iceowl and Iceape, respectively.

While you can download a tarball of Firefox from the web and use that, it’d be nice to get a variant that updated through Debian’s normal apt-get channels. In fact, Iceweasel does get updated whenever there is a new release of Firefox, even if these updates never find their way into the usual repositories. While I have been known to take advantage of the more frozen state of Debian compared with other Linux distributions, I don’t mind getting Iceweasel updated so that it isn’t a security worry.

The first step in so doing is to add the following lines to /etc/apt/sources.list using root access (using sudo, gksu or su to assume root privileges) since the file normally cannot be edited by normal users:

deb http://backports.debian.org/debian-backports squeeze-backports main

deb http://mozilla.debian.net/ squeeze-backports iceweasel-release

With the file updated and saved, the next step is to update the repositories on your machine using the following command:

sudo apt-get update

With the above complete, it is time to overwrite the existing Iceweasel installation with the latest one, using an apt-get command whose -t switch specifies squeeze-backports as the target release. While Iceweasel itself is installed from the iceweasel-release squeeze-backports repository, there are dependencies that need to be satisfied, and these come from the main squeeze-backports one. The actual command used is below:

sudo apt-get install -t squeeze-backports iceweasel

While that was all that I needed to do to get Iceweasel 18.0.1 in place, some may need the pkg-mozilla-archive-keyring package installed too. For those needing more information than what’s here, there’s always the Debian Mozilla team.
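If the sources.list additions described above ever get scripted, it helps not to append the same deb lines again on a re-run. Here is a minimal sketch, using a hypothetical add_line_once helper; it has to be run as root when the target is /etc/apt/sources.list:

```shell
#!/bin/sh
# add_line_once FILE LINE  (hypothetical helper for illustration)
# Appends LINE to FILE only if an identical line is not already there,
# so running the setup twice does not duplicate APT source entries.
add_line_once() {
    file=$1
    line=$2
    # -q: quiet, -x: whole-line match, -F: treat LINE as a fixed string
    if ! grep -qxF -- "$line" "$file" 2>/dev/null; then
        printf '%s\n' "$line" >> "$file"
    fi
}

# Usage as root, mirroring the steps above:
# add_line_once /etc/apt/sources.list 'deb http://backports.debian.org/debian-backports squeeze-backports main'
# add_line_once /etc/apt/sources.list 'deb http://mozilla.debian.net/ squeeze-backports iceweasel-release'
# apt-get update && apt-get install -t squeeze-backports iceweasel
```

Matching whole lines as fixed strings avoids surprises from the dots and slashes in repository URLs, which grep would otherwise treat as pattern characters.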

Office 2007 on test…

23rd January 2007

With its imminent launch and having had a quick look at one of its beta releases, I decided to give Office 2007 a longer look after it reached its final guise. This is courtesy of the demonstration version that can be downloaded from Microsoft’s website; I snagged Office Standard, which contains Word, Excel, PowerPoint and Outlook. Very generously, the trial version that I am using gives me until the end of March to come to my final decision.

And what are my impressions? Outlook, the application from the suite that I most use, has changed dramatically since Outlook 2002, the version that I have been using. Unless you open up an email in full screen mode, the ribbon interface so prevalent in other members of the Office family doesn’t make much of an appearance here. The three-paned interface taken forward from Outlook 2003 is easy to get around. I especially like the ability to collapse/expand a list of emails from a particular sender: it really cuts down on clutter. The ZoneAlarm anti-spam plug-in on my system was accepted without any complaint as were all of my PST files. One thing that needed redoing was the IMAP connection to my FastMail webmail account but that was driven more by Outlook warning messages than by necessity from a user experience point of view. I have still to get my Hotmail account going but I lost that connection when still using Outlook 2002 and after I upgraded to IE7.

What do I make of the ribbon interface? As I have said above, Outlook is not pervaded by the new interface paradigm until you open up an email. Nevertheless, I have had a short encounter with Word 2007 and am convinced that the new interface works well. It didn’t take me long to find my way around at all. In fact, I think that they have done a great job with the new main menu triggered by the Office Button (as Microsoft call it) and got all sorts of things in there; the list includes Word options, expanded options for saving files (including the new docx file format, of course, but the doc format has not been discarded either) and a publishing capability that includes popular blogs (WordPress.com, for instance) together with document management servers. Additionally, the new zoom control in the bottom right-hand corner is much nicer than the old drop-down menu. As regards the “ribbon”, this is an extension of the tabbed interfaces seen in other applications like Adobe HomeSite and Adobe Dreamweaver, the difference being that the tabs are the only place where any function is found because there is no menu as a backup. There is an Add-ins tab that captures plug-ins to things like Adobe Distiller for PDF creation. Macromedia in its pre-Adobe days offered FlashPaper for doing the same thing, and this seems to function without a hitch in Word 2007. Right-clicking on any word in your document not only gives you suggested corrections to misspellings but also synonyms (no more Shift-F7 for the thesaurus, though it is still there if you need it) and enhanced on-the-spot formatting options. A miniature formatting menu even appears beside the expected context menu; I must admit that I found that a little annoying at the beginning, but I suppose that I will get used to it.

My use of Outlook and Word will continue (the latter’s blogging feature is very nice), but I haven’t had reason to look at Excel or PowerPoint in detail thus far. From what I have seen, the ribbon interface pervades in those applications too. Even so, my impressions of the latest Office are very favourable. The interface overhaul may be radical, but it does work. Their changing the file formats is a more subtle change, but it does mean that users of previous Office versions will need the converter tool in order for document sharing to continue. Office 97 was the last time when we had to cope with that, and it didn’t seem to cause the world to grind to a halt.

Will I upgrade? I have to say that it is very likely given what is available in Office Home and Student edition. That version misses out on having Outlook, but the prices mean that even buying Outlook standalone to complement what it offers remains a sensible financial option. Taking a look at the retail prices on dabs.com confirms the point:

Office Home and Student Edition: £94.61

Office Standard Edition: £285.50

Office Standard Edition Upgrade: £175.96

Outlook 2007: £77.98
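Putting those listed prices together shows why the full-product route looks attractive: Home and Student plus a standalone Outlook 2007 comes to slightly less than the Standard upgrade alone (all figures in £, as listed above):

```latex
\begin{aligned}
94.61 + 77.98 &= 172.59 && \text{(Home and Student + Outlook 2007)}\\
175.96 - 172.59 &= 3.37 && \text{(saving against the Standard upgrade)}\\
285.50 - 172.59 &= 112.91 && \text{(saving against full Standard)}
\end{aligned}
```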

Having full version software for the price of an upgrade sounds good to me and it is likely to be the route that I take, if I replace the Office XP Standard Edition installation that has been my mainstay over the last few years. Having been on a Windows 95 > Windows 98 > Windows 98 SE > Windows ME upgrade treadmill and endured the hell raised when reinstallation becomes unavoidable, the full product approach to getting the latest software appeals to me over the upgrade pathway. In fact, I bought Windows XP Professional as the full product in order to start afresh after moving on from Windows 9x.

A bigger screen?

23rd February 2010

A recent bit of thinking has caused me to cast my mind back over all the screens that have sat in front of me while working with computers over the years. Well, things have come a long way from the spare television that I used with a Commodore 64 when I occasionally got to explore the thing. Needless to say, a variety of dedicated CRT screens ensued as I started to make use of Apple computers and IBM-compatible PCs provided in computing labs and other such places before I bought an example of the latter as my first PC of my own. That sported a 15″ display that stood out a little in times when 14″ ones were mainstream, but a 17″ Iiyama followed it when its operational quality deteriorated. That Iiyama came south with me from Edinburgh as I moved to where the work was and offered sterling service before it too started to succumb to ageing.

During the time that the Iiyama CRT screen was my mainstay at home, there were changes afoot in the world of computer displays. A weighty 21″ Philips screen was what greeted me on my first day at work, but 21″ Eizo LCD displays were set to replace those behemoths and remain in use as if to prove the longevity of LCD panels and the validity of using what had been sufficient for laptops for a decade or so. In fact, the same comment regarding reliability applies to the screen that I now use at home, a 17″ Iiyama LCD panel (yes, I stuck with the same brand when I changed technologies longer ago than I like to remember).

However, that hasn’t stopped me wondering about my display needs, and it’s screen size rather than the reliability of the current panel that is making me think. That is a reflection of how my home computing needs have changed over time, and those changes show how my non-computing interests have evolved too. Photography is but one of these, and the move to digital capture has brought with it a great deal more image processing, so much so that I wonder if I need to take fewer photos rather than bringing home so many that it can be hard to pick out the ones that deserve a wider viewing. That is one area where a bigger screen would help, but there is another, and it arises from my interest in exploring countryside on foot or on my bike: digital mapping. When planning outings, it would be nice to have a wider field of view so that I can see more of the map at a larger scale.

None of the above is the kind of showstopper that an unreliable screen would be, so I am going to take my time on this one. Spreading the desktop across two screens is another idea, but that needs some thought about where it all would fit; the room that I have set aside for working at my computer isn’t the largest, but it’ll need to do. After the space side of things, there’s the matter of setting up the hardware. Quite how a dual display is going to work with a KVM setup is something to explore, as is adding extra video cards to existing machines. Once the hardware fiddling is done, the software side of things is not a concern for me, thanks to my having used a laptop as my main machine for a while last year. That confirmed that Windows (Vista, though it has been possible since 2000 anyway…) and Ubuntu (other modern Linux distributions should work too…) can cope with a desktop spanning two screens out of the box.

Apart from the nice thought of having more desktop space, the other tempting side to all of this is what you can get for not much outlay. It isn’t impossible to get a 22″ display for less than £200, and the prices of 24″ ones are tempting too. That’s a far cry from paying nearly £300 (if my memory serves me correctly) for that 17″ Iiyama, and I’d hope that the quality is as good as ever.

It’s all very well talking about pricing, but you need to sit down and choose a make and model when it comes to making a purchase. There is plenty of choice, so that would take a while, but magazine reviews will come in handy here. That said, last year’s computing misadventures have me questioning the sense of going for what a magazine places on its A-list. They also have me minded to go to a nearby computer shop to make a purchase rather than choosing a supplier on the web; it is easier to take back a faulty unit if you don’t have far to go. Speaking of faulty units, last year has left me contemplating waiting until the year is older before making any acquisitions of computer kit. All of that has put the idea of buying a new screen on the low-priority list: nice to have but not essential. For now, that is where it stays, but you never know what the attractions of a shiny new thing can do…

Adding a new hard drive to Ubuntu

19th January 2009

This is a subject that I thought that I had discussed on this blog before, but I can’t seem to find any reference to it now. I discussed adding hard drives to Windows machines a while back, so that might explain why I was under that impression. Of course, there’s always the possibility that I can’t find things on my own blog, but I’ll go through the process anyway.

What brought all of this about was the rate at which digital images were filling my hard disks. Even with some housekeeping, I could only foresee the collection growing, so I went and ordered a 1TB Western Digital Caviar Green Power from Misco. City Link did the honours with the delivery, and I can credit their customer service with organising delivery without my needing to get to the depot to collect the thing; it was a refreshing experience that left me pleasantly surprised.

For most of the time, the hard drives that I have had have generally got on with the job, but there was one experience that has left me wary. Assured by good reviews, I went and got myself an IBM DeskStar, and its reliability didn’t fill me with confidence, so I will not touch the Hitachi equivalents because of it (IBM sold its hard drive business to Hitachi). This was a period when I had hardware faltering on me, with an Asus motherboard putting me off that brand around the same time as well (I now blame it for my going through a succession of AMD Athlon CPUs). The result is that I tend to go for brands that I can trust from personal experience, and Western Digital falls into this category (as does Gigabyte for motherboards), hence my going for a WD this time around. That’s not to say that other hard drive makers wouldn’t satisfy my needs, since I have had no problems with disks from Maxtor or Samsung, but I’ll stick with those makers that I know until they let me down, something that I hope never happens.

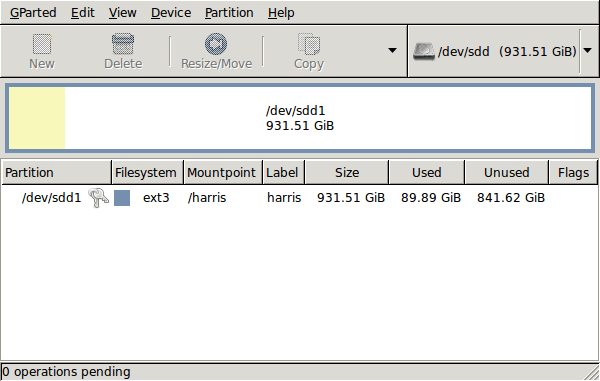

GParted running on Ubuntu

Anyway, let’s get back to installing the hard drive. The physical side of the business was the usual shuffle within the PC to add the SATA drive before starting up Ubuntu. From there, it was a matter of firing up GParted (System -> Administration -> Partition Editor on the menus if you already have it installed). The next step was to find the new empty drive and create a partition table on it. At this point, I selected msdos from the menu before proceeding to set up a single ext3 partition on the drive. You then need to select Edit -> Apply All Operations from the menus to set things in motion before sitting back and waiting for GParted to do its thing.
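For anyone who prefers the terminal, roughly the same steps can be done with parted and mkfs.ext3 instead of GParted. This is a hedged sketch rather than what I actually ran: the function name plan_single_ext3_partition is hypothetical, and it only prints the commands (a dry run) so that nothing gets wiped by accident. It also assumes a whole-disk device such as /dev/sdd whose first partition is named by appending 1, which does not hold for NVMe devices.

```shell
#!/bin/sh
# Hypothetical dry-run helper: print (but do not run) commands that mirror
# the GParted steps for a whole, empty disk.
# Assumption: partition 1 of the disk is named "${disk}1" (true for /dev/sdX,
# not for NVMe devices like /dev/nvme0n1, whose partitions end in "p1").
plan_single_ext3_partition() {
    disk="$1"
    # msdos (MBR) partition table plus one primary partition filling the disk;
    # --script stops parted from asking for confirmation.
    echo "sudo parted --script $disk mklabel msdos mkpart primary ext3 0% 100%"
    # parted only creates the partition, so format it as ext3 separately.
    echo "sudo mkfs.ext3 ${disk}1"
}

plan_single_ext3_partition /dev/sdd
```

Reviewing the printed commands before pasting them into a terminal plays the same role as checking GParted’s pending operations before choosing Apply All Operations.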

After the GParted activities, the next task is to set up automounting for the drive so that it is available every time that Ubuntu starts up. The first thing to be done is to create the folder that will be the mount point for your new drive, /newdrive in this example. After that, edit /etc/fstab with superuser access to add a line like the following with the correct UUID for your situation:

UUID=32cf775f-9d3d-4c66-b943-bad96049da53 /newdrive ext3 defaults,noatime,errors=remount-ro 0 2

You can also add a comment like “# /dev/sdd1” above that so that you know what’s what in the future. To get the actual UUID that you need to add to fstab, issue a command like one of those below, changing /dev/sdd1 to what is right for you:

sudo vol_id /dev/sdd1 | grep "UUID=" # Older Ubuntu versions

sudo blkid /dev/sdd1 | grep "UUID=" # Newer Ubuntu versions

This is the sort of thing that vol_id gives back (blkid shows the same value in quotes within a single line of output), and the part beyond the “=” is what you need:

ID_FS_UUID=32cf775f-9d3d-4c66-b943-bad96049da53
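Pulling out the UUID and writing the fstab line can be scripted too. This is a minimal sketch under some assumptions: the helper names uuid_from_line and fstab_entry are hypothetical, and the entry reuses the ext3 options above, with the two trailing fields set to 0 (skip dump) and 2 (check with fsck after the root filesystem). The usage lines are left commented out because they need root access and a real device; blkid’s -s UUID -o value options print the bare UUID directly.

```shell
#!/bin/sh
# Hypothetical helper: take a single KEY=value field such as
# ID_FS_UUID=... (vol_id) or UUID="..." (blkid) and print only the value.
uuid_from_line() {
    value=${1#*=}        # drop everything up to and including the first "="
    value=${value#\"}    # drop a leading quote, as blkid prints UUID="..."
    value=${value%\"}    # ...and the matching trailing quote
    printf '%s\n' "$value"
}

# Hypothetical helper: print an /etc/fstab entry for an ext3 data drive.
# Fields: device, mount point, type, options, dump (0), fsck order (2).
fstab_entry() {
    printf 'UUID=%s %s ext3 defaults,noatime,errors=remount-ro 0 2\n' "$1" "$2"
}

# Example usage (needs root and a real partition, so left commented out):
#   sudo mkdir -p /newdrive
#   uuid="$(sudo blkid -s UUID -o value /dev/sdd1)"
#   { echo "# /dev/sdd1"; fstab_entry "$uuid" /newdrive; } | sudo tee -a /etc/fstab
```

Appending with tee -a rather than redirecting with > avoids overwriting the existing contents of /etc/fstab, which would leave the system unbootable.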

Once all of this has been done, a reboot is in order, and you then need to set up folder permissions as required before you can use the drive. For this part, I fire up Nautilus using gksu and set the folder’s group to my own user group in the Permissions tab of the Properties dialogue for the mount point (/newdrive, for example). After that, I issued something akin to the following command to set permissions for everyone (full access for the owner and group, read and execute access for others):

sudo chmod 775 /newdrive
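To see what mode 775 actually grants without touching /newdrive, it can be tried on a throwaway directory. This sketch assumes GNU stat (as shipped with Ubuntu), whose %A format prints the symbolic mode; the function name symbolic_mode is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: apply an octal mode to a temporary directory and
# print the resulting symbolic permissions, then tidy up.
# Assumes GNU stat, where -c '%A' prints e.g. drwxrwxr-x.
symbolic_mode() {
    dir="$(mktemp -d)"
    chmod "$1" "$dir"
    stat -c '%A' "$dir"
    rmdir "$dir"
}

symbolic_mode 775   # owner rwx, group rwx, others r-x
```

The group’s write bit is what makes the Nautilus group setting matter: with 775, members of the folder’s group can write to the drive, while everyone else can only read from it.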

With that, I had completed what I needed to do to get the WD drive going under Ubuntu. After that IBM DeskStar experience, the new drive remains on probation, but moving some non-essential things onto it has allowed me to free some space elsewhere and carry out a reorganisation. Further consolidation will follow, but I hope that the new 931.51 GiB (binary gigabytes of 1024 × 1024 × 1024 bytes, rather than the decimal gigabytes of 1,000,000,000 bytes preferred by hard disk manufacturers) will keep me going for a good while before I need to add extra space again.
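The arithmetic behind that figure is easy to check. An exact decimal terabyte (10^12 bytes) comes to about 931.32 GiB, so a reading of 931.51 GiB implies a little more than that. The second call below assumes the drive has 1,953,525,168 sectors of 512 bytes, the usual count for a 1TB drive, which I have not confirmed for this particular unit; the helper name bytes_to_gib is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: convert a byte count into binary gigabytes (GiB),
# i.e. divide by 1024 * 1024 * 1024 = 1,073,741,824, to two decimal places.
bytes_to_gib() {
    awk -v bytes="$1" 'BEGIN { printf "%.2f\n", bytes / (1024 * 1024 * 1024) }'
}

bytes_to_gib 1000000000000   # an exact decimal terabyte
bytes_to_gib 1000204886016   # assumed: 1,953,525,168 sectors x 512 bytes
```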

Why all the commas?

4th December 2022

In recent times, I have been making use of Grammarly for proofreading what I write for online consumption. That has applied as much to what I write in Markdown form as it has for what is authored using content management systems like WordPress and Textpattern.

The free version does nag you to upgrade to a paid subscription, but that is not my main irritation. That would be its inflexibility: you cannot turn off rules that you think intrusive, at least in the free version. This comment is particularly applicable to the unofficial plugin that you can install in Visual Studio Code. To me, the add-on for Firefox feels less scrupulous.

There are other options, though, and one that I have encountered is LanguageTool. This also offers a Firefox add-on, but there are others not only for other browsers but also for Microsoft Word. Recent versions of LibreOffice Writer can connect to a LanguageTool server using built-in functionality, too. There are also dedicated editors for iOS, macOS and Windows.

The one operating system that does not get specific add-on support is Linux, but there is another option there. That uses an embedded HTTP server that I installed using Homebrew and set to start automatically using cron. This really helps when using the LanguageTool Linter extension in Visual Studio Code, because it can connect to that local server instead of the public API, which bans your IP address if you overuse it. The extension is also configurable, with the ability to add exceptions (both grammatical and spelling), though I appear to have enabled smart formatting only to have it mess up quotes in a Markdown file, which then caused Hugo rendering to fail.
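A local server can also be queried directly for a quick check, since it answers the same /v2/check HTTP API as the public service, taking the text and a language code as form parameters and returning JSON. This is a minimal sketch, assuming the server listens on http://localhost:8081 (the port used in LanguageTool’s own server documentation; yours may differ, so LT_URL can override it). The helper names lt_check_url and lt_check are hypothetical, and curl must be installed.

```shell
#!/bin/sh
# Assumption: a LanguageTool HTTP server is listening on localhost:8081;
# set LT_URL to point elsewhere.
lt_check_url() {
    printf '%s/v2/check\n' "${LT_URL:-http://localhost:8081}"
}

# Hypothetical helper: POST the text to /v2/check and print the JSON reply,
# whose "matches" array lists any possible problems found.
lt_check() {
    curl --silent --show-error \
        --data "language=en-GB" \
        --data-urlencode "text=$1" \
        "$(lt_check_url)"
}

# Example usage (needs the server to be running):
#   lt_check "I have been busy and I have not had time to write."
```

Using --data-urlencode for the text means that spaces, ampersands and other punctuation in the sentence survive the trip to the server intact.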

Like Grammarly, LanguageTool has an online editor that offers more if you choose an annual subscription. That is cheaper than the one from Grammarly, so I went for that instead to get rephrasing suggestions both in the online editor and through a browser add-on. It is better not to get nagged all the time…

The title may surprise you, but I have been using co-ordinating conjunctions without commas for as long as I can remember. Both Grammarly and LanguageTool pick up on these, LanguageTool being especially good at finding them, so I had to do some investigation, which revealed a gap in my education. What I also found is how repetitive my writing style can be, which means that rephrasing has been needed too. That, after all, is the point of a proofreading tool, though it can rankle if you have fixed opinions about grammar or enjoy creative writing.

Putting some out-of-copyright texts by other authors through these tools triggers all kinds of messages, but you just have to ignore these. Turning off checks needs care, even if turning them on again is easy to do. There is, however, the danger that artificial intelligence tools could make writing too uniform, since there is only so much that these technologies can do. They should make you look at your text more intently, though, which is never a bad thing because computers still struggle with meaning.